What is Big O and not Big Bang?

We all know that the Big Bang is what started the universe we live in, but Big O has nothing to do with it. There is a lot of fuss about Big O these days. Accion’s CEO, Kinesh, always says that it is no longer sufficient for your code to work and do all the expected things. What carries more value is its response time, performance, scalability, and throughput.

Big O notation is used in computer science to describe the performance or complexity of code or an algorithm, that is, how its running time or memory use grows as the input grows. This is the era of big data and microservices, where every CPU cycle counts and every system is connected to other systems in the ecosystem. Even a minute delay in one component or piece of code might end up slowing or shutting down the overall software.

The software industry has matured over the past two decades. Gone are the days of waterfall; everything has moved to agile. Code that has no bugs but performs poorly and delays the response is of no use. Any system can work with a small set of data, but as the data increases, performance degrades if the system was not designed with Big O analysis in mind.
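To make the point about growing data concrete, here is a minimal sketch that counts how many steps two search strategies take as the input grows. The function names and the sample sizes are my own illustrative choices, not part of any particular system.

```python
def linear_search_steps(items, target):
    """Count the comparisons a linear scan makes: O(n)."""
    steps = 0
    for item in items:
        steps += 1
        if item == target:
            break
    return steps


def binary_search_steps(items, target):
    """Count the probes a binary search makes on sorted input: O(log n)."""
    steps = 0
    lo, hi = 0, len(items) - 1
    while lo <= hi:
        steps += 1
        mid = (lo + hi) // 2
        if items[mid] == target:
            break
        if items[mid] < target:
            lo = mid + 1   # target is in the upper half
        else:
            hi = mid - 1   # target is in the lower half
    return steps


if __name__ == "__main__":
    for n in (1_000, 1_000_000):
        data = list(range(n))   # already sorted
        worst = n - 1           # last element: worst case for the linear scan
        print(n, linear_search_steps(data, worst), binary_search_steps(data, worst))
```

When the data grows 1,000 times, the linear scan does 1,000 times more work, while the binary search needs only about ten extra probes. That difference in growth is what Big O describes.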

Let me give you a real-life example to explain Big O. I gave a box of donuts to my daughter Swasti and her friend. They finished the first donut as soon as it was given, maybe 5 seconds max ;-). The 2nd donut took 10 seconds, the 3rd took 5 minutes, and the 4th took 10 minutes or more. As the number of donuts increased, the time per donut grew and the overall eating performance degraded. Big O analysis helps us make the right design decisions so that time and space complexity stay under control as the input grows.

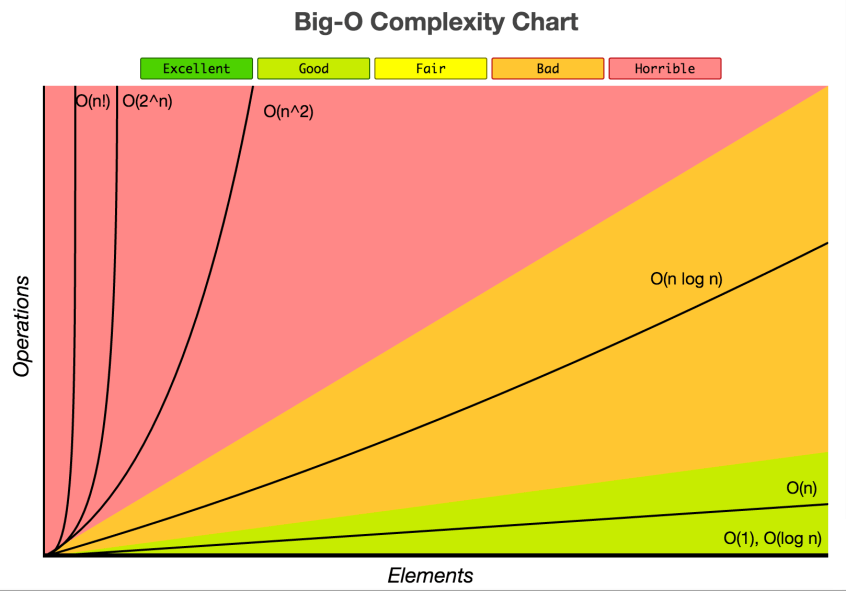

Here is a diagram:

Let me explain a use case: the “Password Blacklist” security feature, which I architected and implemented for one of our prestigious clients (I shared a demo of it during the Accion innovation summit in Goa). Basically, we want to prevent customers from using compromised passwords. When a user creates an account, we need to validate that the supplied password is not part of a data set of 1.2 billion compromised, hashed passwords. We were committed to creating the user within 1 second and responding to the client so that other microservices could consume it, but handling big data with a sub-second response was not easy. I had to do a Big O analysis of each component involved and design it to scale with increasing data. In the end, I succeeded in implementing it, and it yields consistent performance with a sub-second response even with a billion records in DynamoDB.
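The idea behind that kind of check can be sketched in a few lines. This is only an illustration under my own assumptions, not the client implementation: the SHA-1 hash, the three sample passwords, and the function names are hypothetical, and an in-memory Python `set` stands in for a key-value store that is looked up by key.

```python
import hashlib


def sha1_hex(password: str) -> str:
    """Hash a password (SHA-1 is an assumption made for this sketch)."""
    return hashlib.sha1(password.encode("utf-8")).hexdigest()


# Tiny stand-in for the compromised data set (hypothetical sample values).
COMPROMISED_LIST = [sha1_hex(p) for p in ("123456", "password", "qwerty")]
COMPROMISED_SET = set(COMPROMISED_LIST)


def is_compromised_scan(password: str, hashes: list) -> bool:
    """O(n): compares against every stored hash until a match is found."""
    digest = sha1_hex(password)
    for stored in hashes:
        if stored == digest:
            return True
    return False


def is_compromised_lookup(password: str, hashes: set) -> bool:
    """O(1) on average: a single hash-keyed lookup, whatever the data size."""
    return sha1_hex(password) in hashes


if __name__ == "__main__":
    for candidate in ("password", "correct horse battery staple"):
        print(candidate, is_compromised_lookup(candidate, COMPROMISED_SET))
```

Both functions give the same answer, but the scan gets slower with every record added, while the keyed lookup stays roughly constant. With a billion records, only the second shape can fit a sub-second budget.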

If we do not consider Big O and performance while designing, our software will soon start showing degraded performance and might even stop working as data grows at an exponential rate. Accion Labs offers services to optimize the performance of the client’s software so that it keeps performing well with rapidly increasing data.